[Research] In-situ study of a mobile command centre for large interactive displays

Last update: 24-Jan-2016

Most research on large interactive displays focuses on work and learning. Yet these displays are versatile and well suited to information dissemination. This is a project I did with my supervisor and a master's student in 2010-2011, investigating the feasibility of installing a large interactive display in a mobile command centre.

A little background first. At any large event, many things can go wrong: lost children, injuries, accidents, fights, etc. At times like these a number of professionals are involved (regional police, medical support, and firefighters, to name a few), and a central hub is needed to coordinate human resources so that help can be deployed efficiently. In the Waterloo region there is a team doing just that: WR-React. WR-React is a team of local volunteers who play a liaison role, providing emergency response and facilitating coordination among the other professionals.

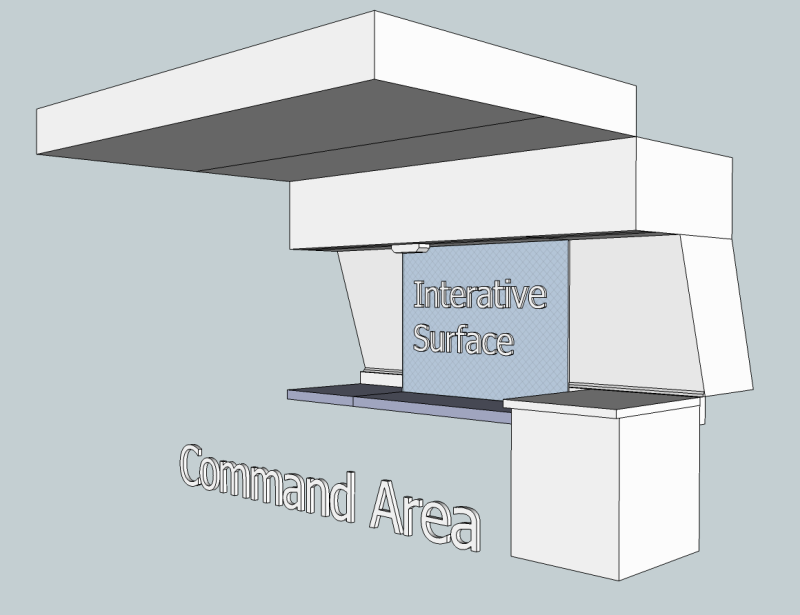

When there is a large event, for example a fund-raising marathon or a festive parade, WR-React sets up its mobile command centre at a vantage point to coordinate various resources with the organizers as well as the professionals. The problem they had was that they could rely only on walkie-talkies to communicate the whereabouts of incidents and their agents. This project aimed at mitigating this problem using large interactive displays.

To ensure the design complied with their workflow, we carried out an in-situ study of how WR-React worked. This was part of the Contextual Inquiry methodology, in which researchers go to the workplace and gain insight into the procedures, breakdowns, and culture of the work. We took field notes, analysed them with open coding, and used affinity diagramming to establish design requirements. The end result was an application that automatically tracks the agents' locations via GPS and shows them on a display. Unfortunately, the technology available at the time didn't allow a display of the envisioned scale inside the cramped mobile command centre, so we instead ran the application on a PC monitor.

Nevertheless, a few publications came out of our findings (listed below), and hopefully in the near future, when the technology is ready, the envisioned large interactive display can be installed.

Another nice outcome of this project was that it gave me the idea of designing interfaces for large interactive displays that are immediately apprehensible to novice users, which led to my thesis research.

Related publications:

[1] Cheung, V., Cheaib, N., Scott, S.D. (2011). Interactive Surface Technology for a Mobile Command Centre. In Extended Abstracts of CHI2011: ACM International Conference on Human Factors in Computing Systems. Vancouver, BC, May 7-12, 2011, pp. 1771-1776.

[2] Cheaib, N., Cheung, V., Cerar, K., Scott, S.D. (2011). A Multi-Agency Collaboration and Coordination Hub (MACCH). Poster Presentation at Graphics Interface 2011. St. John's, NF, May 25-27, 2011.

[3] Genest, A.M., Bateman, S., Tang, A., Scott, S., Gutwin, C. (2012). Why Expressiveness Matters in Command & Control Visualizations. Presented at the Workshop on Crisis Informatics at CSCW 2012: ACM Conference on Computer-Supported Cooperative Work, Seattle, WA, February 11, 2012.
